Model Evaluation: Why Accuracy Isn’t Enough

- James Wilkins

- Mar 29, 2025

Suppose we have a model that predicts the colour of a ball. We have 5 red balls and 5 blue balls, and we ask our model to make a prediction of the colour of each of them.

How can we evaluate our model’s success?

N.B. Throughout this article, we’ll refer to red as the positive class and blue as the negative.

The problem with accuracy

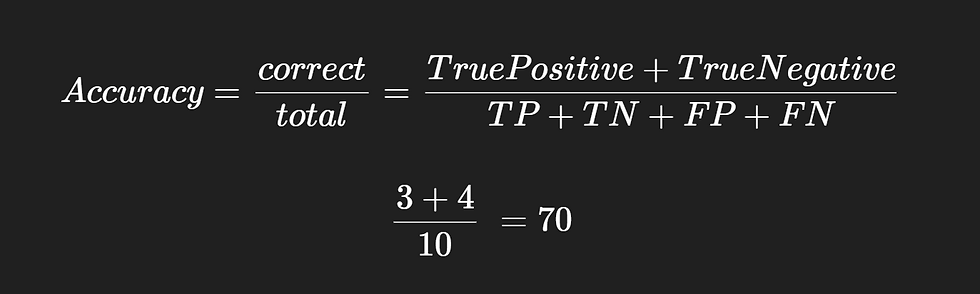

One approach would be to count the number of predictions that our model gets right. We count the number of red balls that the model predicts to be red (number of True Positives) and count the number of blue balls that our model predicts to be blue (the number of True Negatives). We take the sum of these two values as the total number of predictions that the model got correct. Dividing this by the total number of balls gives us the accuracy of the model.
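Written as a formula, with TP and TN standing for the number of true positives and true negatives (the abbreviations are our shorthand):

$$
\text{accuracy} = \frac{TP + TN}{\text{total number of balls}}
$$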

We find that the model has an accuracy of 70%. It made a few mistakes, but hey, nobody’s perfect. We can construct a confusion matrix of these results that tells us a bit more about what our model is doing well and where it falls short.

We can see that we had 2 false positives (blue balls that were classified as red) and 1 false negative (a red ball that was classified as blue).
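A minimal sketch of how these counts could be tallied in Python. The label lists are illustrative: the ordering of the balls is an assumption, chosen only to match the counts above (5 red, 5 blue, 2 false positives, 1 false negative), and `confusion_counts` is a hypothetical helper rather than a library function.

```python
from collections import Counter

RED, BLUE = "red", "blue"  # red is the positive class, blue the negative

# Illustrative labels only: one arrangement consistent with the counts above.
actual = [RED] * 5 + [BLUE] * 5
predicted = [RED, RED, RED, RED, BLUE,     # 4 reds found, 1 red missed
             RED, RED, BLUE, BLUE, BLUE]   # 2 blues called red, 3 blues found


def confusion_counts(actual, predicted, positive=RED):
    """Return (TP, FP, FN, TN) for a two-class problem."""
    pairs = Counter((a == positive, p == positive)
                    for a, p in zip(actual, predicted))
    tp = pairs[(True, True)]     # actually positive, predicted positive
    fn = pairs[(True, False)]    # actually positive, predicted negative
    fp = pairs[(False, True)]    # actually negative, predicted positive
    tn = pairs[(False, False)]   # actually negative, predicted negative
    return tp, fp, fn, tn


tp, fp, fn, tn = confusion_counts(actual, predicted)
accuracy = (tp + tn) / len(actual)
print(f"TP={tp} FP={fp} FN={fn} TN={tn} accuracy={accuracy:.0%}")
# prints: TP=4 FP=2 FN=1 TN=3 accuracy=70%
```

The four counts are exactly the four cells of the confusion matrix, and accuracy only uses two of them.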

Let’s try a different set of data. What happens if we have 8 red and 2 blue balls? A different model might make the following prediction:

This time, all the balls were classified as red, and the accuracy was 80%. Better, right? Let’s see what the confusion matrix says…

We can see that while the number of true positives is high (all 8 red balls), both blue balls are false positives and there are no true negatives at all: the model never identifies a blue ball. Depending on the context, this could be a problem.

For example, let’s say we are building a model to detect spam emails. If the model identifies everything as spam (lots of false positives), then we’ll end up losing emails that aren’t actually spam!

Equally, if the aim of our model is to screen people for a disease, we don’t want anyone who has the disease to be missed by the model and test negative. In this situation, it would be better to have a lot of false positives and as few false negatives as possible.

This issue is especially important when we don’t have an even balance of positives and negatives in our sample. In the disease screening example, only 2 out of 100 people may have the disease. If our model identifies everybody as negative, it will have an accuracy of 98%. But it’s those two people who do have the disease that we’re really interested in, and the model finds neither of them, so really our model is 0% useful!
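The arithmetic behind that claim can be checked with a short sketch. The data is hypothetical (2 positives in a sample of 100, as in the example), and the "model" is simply a list of all-negative predictions.

```python
# Hypothetical screening sample: 2 people with the disease, 98 without.
actual = [1] * 2 + [0] * 98        # 1 = has the disease (positive class)
predicted = [0] * len(actual)      # a "model" that says negative for everyone

correct = sum(a == p for a, p in zip(actual, predicted))
accuracy = correct / len(actual)

# Of the people who really have the disease, how many did the model catch?
cases_found = sum(a == 1 and p == 1 for a, p in zip(actual, predicted))
detection_rate = cases_found / sum(actual)

print(f"accuracy={accuracy:.0%}, cases detected={detection_rate:.0%}")
# prints: accuracy=98%, cases detected=0%
```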

An alternative approach

Let’s introduce some alternative measures we can use to evaluate our model:
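The two measures used in the rest of this article are precision and recall. Their standard definitions, in terms of the counts of true positives (TP), false positives (FP) and false negatives (FN), are:

$$
\text{precision} = \frac{TP}{TP + FP}
\qquad
\text{recall} = \frac{TP}{TP + FN}
$$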

These equations come from looking at different parts of the confusion matrix:

If we maximise the precision of our model, we minimise the fraction of positive predictions that are actually false positives. This means we will minimise the number of false alarms.

If we maximise the recall of our model, we will minimise the false negative rate. This means we will minimise the number of positives that go undetected.
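As a small sketch, here are both measures computed from confusion-matrix counts. The function names are our own, and returning 0.0 when the denominator is zero is a convention assumed for this example, not something the definitions dictate. The counts come from the first ball example (5 red balls with 1 missed gives 4 true positives) and from the screening model that labels everyone negative.

```python
def precision(tp, fp):
    """Fraction of positive predictions that are correct: TP / (TP + FP)."""
    predicted_positive = tp + fp
    # Assumed convention: report 0.0 when nothing was predicted positive.
    return tp / predicted_positive if predicted_positive else 0.0


def recall(tp, fn):
    """Fraction of actual positives that are found: TP / (TP + FN)."""
    actual_positive = tp + fn
    # Assumed convention: report 0.0 when there are no actual positives.
    return tp / actual_positive if actual_positive else 0.0


# First ball example: 4 true positives, 2 false positives, 1 false negative.
print(f"balls:     precision={precision(4, 2):.2f} recall={recall(4, 1):.2f}")
# prints: balls:     precision=0.67 recall=0.80

# Screening model that labels all 100 people negative: TP=0, FP=0, FN=2.
print(f"screening: precision={precision(0, 0):.2f} recall={recall(0, 2):.2f}")
# prints: screening: precision=0.00 recall=0.00
```

Unlike its 98% accuracy, the screening model's recall of zero makes its failure obvious.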

We can combine these measures together into one value: an F1 score.

An F1 score is computed as the harmonic mean of precision and recall. The harmonic mean is pulled towards the smaller of the two values, so a model only achieves a high F1 score when precision and recall are both high.

Substituting in the definitions of precision and recall, we get the following definition:
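Writing P for precision and R for recall (symbols introduced here for brevity), with P = TP / (TP + FP) and R = TP / (TP + FN), the standard harmonic-mean form and the result of the substitution are:

$$
F_1 = \frac{2}{\frac{1}{P} + \frac{1}{R}} = \frac{2PR}{P + R} = \frac{2\,TP}{2\,TP + FP + FN}
$$

The last step follows because 1/P + 1/R = (2 TP + FP + FN) / TP.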

Let’s visualise some of this:

We can maximise the precision of this model so that there are no false positives. The data set is partitioned so that one side contains only points that are definitely positive (class 1), while any uncertain or negative (class 0) points fall on the other side. This is how we could tune a model for email spam detection, as we want to minimise the number of false alarms.

We can also maximise the recall of the model so that there are no false negatives. This time, the data set is partitioned so that one side contains only points that are definitely negative, while the other side holds a mixture of positive and negative. This is how we could tune a model for detecting a disease, as it minimises the number of people with the disease who were missed by the test.

In reality, neither of these extremes is appropriate on its own. If a spam filter removes only the emails it is certain about, but half of our inbox is still spam, it’s not a very good filter. If a negative result from a disease test can be trusted, that’s helpful, but if many of the people who test positive are actually healthy, they could receive treatment they don’t need.

A better solution for both situations would be to find a compromise between them. An F1 score rewards exactly this: because it is dragged down by whichever of precision and recall is lower, it is highest when the two are balanced and both reasonably high.
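One common way to move between these extremes is to change the cut-off applied to a model's output score. The sketch below assumes a model that produces a score between 0 and 1 for each point. The scores, labels and thresholds are made up for illustration, and `precision_recall` is a hypothetical helper.

```python
# Made-up model scores (higher = more likely positive) and true labels.
scores = [0.95, 0.90, 0.80, 0.70, 0.60, 0.50, 0.40, 0.30, 0.20, 0.10]
labels = [1, 1, 1, 0, 1, 0, 1, 0, 0, 0]


def precision_recall(threshold):
    """Classify score >= threshold as positive; return (precision, recall)."""
    predicted = [s >= threshold for s in scores]
    tp = sum(p and y == 1 for p, y in zip(predicted, labels))
    fp = sum(p and y == 0 for p, y in zip(predicted, labels))
    fn = sum((not p) and y == 1 for p, y in zip(predicted, labels))
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


for threshold in (0.75, 0.55, 0.35):
    p, r = precision_recall(threshold)
    print(f"threshold={threshold:.2f} precision={p:.2f} recall={r:.2f}")
# prints:
# threshold=0.75 precision=1.00 recall=0.60
# threshold=0.55 precision=0.80 recall=0.80
# threshold=0.35 precision=0.71 recall=1.00
```

On this toy data, a strict threshold gives perfect precision but misses two positives, a lenient one finds every positive at the cost of two false alarms, and the middle threshold balances the two.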

An even better solution would be to introduce a bias so we can decide how to balance precision and recall. In practice, this could mean:

- We allow a small chance that an important email ends up in spam if it means that our inbox is mostly spam-free.

- We allow a small chance that a disease test misses an infected person if it means that a large number of healthy people don’t receive unnecessary treatment.

We introduce a new measure (the generalisation of an F1 score) known as an Fβ score:
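In its standard form, with P for precision and R for recall (our shorthand):

$$
F_\beta = (1 + \beta^2)\,\frac{P \cdot R}{\beta^2 P + R}
$$

Setting β = 1 reduces this to 2PR / (P + R), the harmonic mean of precision and recall.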

With β=1, we have the F1 score as before. With β<1, we prioritise precision. With β>1, we prioritise recall. We can fine-tune to find the balance between them depending on the situation.

LEFT: β = 0.5, biased towards precision, RIGHT: β = 1.3, biased towards recall

This allows us to tune our model to classify points as correctly as possible for the given situation, while remaining within an allowable margin of error.
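To make the effect of β concrete, here is a minimal sketch using the standard Fβ formula. The two precision/recall pairs are hypothetical, and the β values 0.5 and 1.3 are the ones quoted in the figure caption above. `f_beta` is our own helper name.

```python
def f_beta(precision, recall, beta):
    """Standard F-beta score: (1 + b^2) * P * R / (b^2 * P + R)."""
    denominator = beta ** 2 * precision + recall
    # Assumed convention: score 0.0 when precision and recall are both zero.
    if denominator == 0:
        return 0.0
    return (1 + beta ** 2) * precision * recall / denominator


# Two hypothetical models with opposite strengths.
cautious = {"precision": 1.00, "recall": 0.60}   # few false alarms, misses some
thorough = {"precision": 5 / 7, "recall": 1.00}  # finds everything, more alarms

for beta in (0.5, 1.0, 1.3):
    a = f_beta(cautious["precision"], cautious["recall"], beta)
    b = f_beta(thorough["precision"], thorough["recall"], beta)
    better = "cautious" if a > b else "thorough"
    print(f"beta={beta}: cautious={a:.2f} thorough={b:.2f} -> {better}")
```

With β = 0.5 the high-precision model scores higher, while with β = 1 and β = 1.3 the high-recall model does, so the choice of β directly changes which model we would prefer.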

Accuracy might seem like a simple way to judge a model, but it rarely tells the whole story. Different types of errors matter in different ways, and ignoring that can lead to poor decisions. Looking at precision, recall, and the Fβ score gives a clearer picture of how a model actually performs.

Good model evaluation isn’t just about numbers—it’s about making sure the model works where it counts. By using the right mix of metrics and real-world considerations, we can build models that are actually useful.
